In between illegal and harmful: a look at the Community Guidelines and Terms of Use of online platforms in the light of the DSA proposal and the fundamental right to freedom of expression (Part 2 of 3)

By Britt van den Branden, Sophie Davidse and Eva Smit*

This blog is part two of three. See part 1 here. Part 3 will follow soon.

In part one of this blog series, we showed that the Community Guidelines and Terms of Use (hereafter: CG and ToU) of six well-known very large online platforms (YouTube, Twitter, Snapchat, Instagram, TikTok and Facebook) differ significantly in the way they identify and tackle several categories of harmful content. In this part, we put the theory into practice by comparing those findings to two case studies: one relating to former US president Donald J. Trump and the storming of the Capitol, and the other to the conspiracy theory documentary called Plandemic.

Case study of Trump and the storming of the Capitol

On the 6th of January 2021, people around the world were glued to their TVs as supporters of former US president Donald Trump stormed the Capitol in Washington D.C. They were swept up and initially supported by Trump, who had proclaimed that the US election he had just lost to Biden had been fraudulent. His most important pulpit: online platforms.

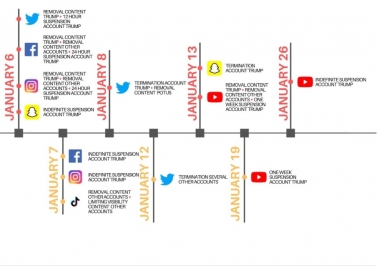

Why does this event lend itself so well to a case study? In the days after the 6th of January, all researched platforms took some form of action, either against content posted by Trump or against his accounts in general. To illustrate this, we have reconstructed the actions taken by the platforms in the form of a timeline. What the timeline mostly indicates is that a domino effect took place: once the first platform had taken action, the gates were opened and all of the others followed in their own way. The severity of the measures still differed somewhat.

The platforms formally took action to stop the spreading of misinformation. This allows us to compare the restrictions mentioned in the CGs and ToUs (see the comparative tables in the first part of this blog series) to the enforcement actions taken by the platforms. Could the general users of the online platforms have predicted, based on the CGs and ToUs, that these types of actions would be taken? What also makes this case study interesting is that Trump was a political figure at the time. This should be taken into account when discussing the boundaries of his freedom of speech and the implications for the freedom of expression of his supporters online. The European Court of Human Rights has ruled that politicians should tolerate a higher degree of criticism due to their public role in a democratic society. At the same time, the Court has also stressed the importance of freedom of expression for elected representatives, by stating that “interferences with the freedom of expression of an opposition member of parliament […] call for the closest scrutiny on the part of the Court.”

It should, however, also be noted that many factors played a role in the actions taken by the platforms. As can be seen in the timeline, a domino effect took place: platforms followed suit once the first had taken action. This leads us to believe that public opinion, and the risk of being accused of not doing enough, also played a significant role in the pace and severity of the actions taken by the platforms. Other elements are undoubtedly important as well, such as the contextual knowledge that, on the 6th of January, Biden had already been proclaimed the winner of the elections and that there was no evidence of any election fraud.

It is also important to observe that the storming of the Capitol and the subsequent actions taken by the platforms took place in an American setting and jurisdiction, while our research and recommendations are confined to the European legislation on freedom of expression and the DSA. This does not, however, affect our analysis of the platforms' response to the events of January on the basis of their CGs and ToUs, as these contractual terms apply globally.

The actions taken by the platforms are based on their CGs and ToUs on mis- and disinformation. Incidentally, when looking at the comparative tables presented in the first part of this blog series, this is also the category that lacks the most clarity. Often the definition is unclear, which in turn makes it vague which enforcement measure will be adopted when. This is for example the case for Instagram and Facebook: although their CGs and ToUs make no specific mention of moderation practices regarding content that could be seen as civic manipulation, these platforms took action on the basis of their misinformation policy.

This yields two main insights for our case study. Firstly, it is unclear why the platforms used the enforcement types that they opted for. When looking at table 2 of the comparative tables presented in the first part of this blog series, we see that most enforcement types that have been used are listed by the platforms in their CG and ToU, and are therefore available for use. However, it remains ambiguous which specific kind of enforcement action will be taken against a specific piece of content. Secondly, the reason given by the platforms, namely stopping the spread of misinformation, is problematic. Because a concrete definition is lacking in the CGs and ToUs, platforms have ample discretion in labelling content as misinformation. This creates uncertainty and, more importantly, a threat to the freedom of expression of the users of the platforms.

The goal of the CGs and ToUs should be that users can rely on them to know what content is protected by their freedom of expression and what is not. Without this basic knowledge, it becomes difficult for them to contest decisions taken by the platforms, as provided for under the DSA proposal. But could users of the platforms, in this particular instance, have predicted the actions taken against Trump?

On the one hand, it is clear that mis- and disinformation was named in the CGs and ToUs of the platforms, and that the enforcement types that were adopted are mentioned there as well. On the other hand, however, such policies display elements of vagueness: on the basis of these policies, it could not be predicted which of Trump’s content would lead to which specific enforcement type. As discussed in our first blogpost, substantive definitions of mis- and disinformation are either lacking or so broad that a wide variety of content could be classified as such.

Case study of the Plandemic conspiracy theory documentary

Although the Capitol riots are one of the very few cases where all of our researched platforms took action, it remains an exceptional case. To gain better insight into the misinformation-related actions that the platforms might take in more ordinary situations, we will discuss another case, namely the Plandemic conspiracy theory documentary.

So, what exactly happened? On the 4th of May 2020, a 26-minute video called Plandemic aired on a website specifically set up to share it. The video was subsequently posted on Facebook, YouTube and Twitter. The video forms the first part of a documentary, in which (discredited) scientist Judy Mikovits is interviewed. In the video, countless ill-founded claims and accusations are made regarding COVID-19, e.g. (as the title of the film suggests) that the virus must originate from a laboratory. Before the video was taken down, it circulated widely online and over 8 million people watched it.

Even though the high number of views may suggest that no immediate actions were taken by Facebook, YouTube and Twitter, these platforms did try to minimise the harm caused by the video. At first, Facebook demoted the video, which means that its visibility was lowered. It later decided to remove the content, as the video included suggestions that wearing a mask can make you ill, which has the potential to cause imminent individual and societal harm. YouTube had already flagged and removed videos discussing the ‘Plandemic’ conspiracy theory before this specific video emerged. YouTube also decided not to recommend Plandemic or surface it on the homepage. In addition, a spokesperson said that YouTube would delete content which includes “medically unsubstantiated diagnostic advice for COVID-19”, and that the Plandemic video falls within this scope. Twitter was the only platform that did not remove the video from its services, as the video did not violate its policy regarding misinformation. It did, however, block the hashtags #PlagueofCorruption and #PlandemicMovie from its trends and search pages, and the video was marked as unsafe.

When taking a look at our study on the CGs and ToUs of the researched platforms, we can argue that the only platform that did not make any unexpected moves is Facebook. Facebook’s CG and ToU already indicate that limiting the visibility of content and removing it are among its methods to combat harmful content. YouTube also decided to limit the visibility of the conspiracy video by not recommending it or surfacing it on the homepage. However, these are enforcement measures which cannot be found in its CG and ToU. Twitter did not remove the video, because it did not meet the platform’s requirements for mis- and disinformation. This is rather remarkable, as Twitter’s CG and ToU lack a clear definition of this category and no further clarification is given of why the video did not qualify as such. Nonetheless, blocking the hashtags to limit the visibility of the content and labelling the video as unsafe are moderation practices that are mentioned.

When there was no room left for the video to spread any further on Facebook, YouTube and Twitter, the conspiracy theorists resorted to other tactics. The NGO First Draft found that clips of the video and the hashtag #Plandemic made their way to TikTok, peaking on the 12th of May 2020 with 62 new videos. This suggests that it could be desirable for very large online platforms to take a more uniform approach to content moderation in certain circumstances.

A few months later, on the 18th of August 2020, the second part of the documentary, called Plandemic – Indoctornation, was released. Remarkably enough, this video did not receive nearly as much attention as the first part. This might have to do with how differently the platforms reacted to this new content compared to the first Plandemic video. A major difference with the release of the first video was that the premiere date of this video was announced at least 887 times on Facebook. This enabled the platforms to prepare their actions before substantial damage could be done. This time, Facebook restricted the sharing of an external link that would lead users of the platform to the full-length documentary. By contrast, Twitter did not block the link, but rather warned users who clicked on it that the link was potentially unsafe. Moreover, it informed users that if clips of the video were posted on its services, they would be evaluated and potentially removed if Twitter classified them as misinformation. YouTube’s approach did not differ that much: full uploads were removed, and segments were evaluated on a case-by-case basis before deciding on content removal. TikTok also made it impossible for its users to search the term ‘Plandemic’. Whenever a search was made, a notification would pop up stating that content linked to that term is in violation of TikTok’s guidelines. However, it is unclear when this measure was implemented and whether it was a direct response to the upload of the full documentary.

We also scrutinised the measures taken against this second part of the documentary. Once again, Facebook and Twitter remained true to their policies, by respectively blocking the content and labelling the video. It is interesting to note that Twitter indicated this time around that certain clips could amount to misinformation. However, again no clarity was offered as to why these clips would qualify as misinformation. As YouTube stated it would remove clips and the full-length video after analysing them, it acted in line with its CG and ToU. Lastly, in contrast to the first video, TikTok also acted upon the threats posed by the Plandemic documentary. Preventing users from searching the term ‘Plandemic’ limits the visibility of the content. However, TikTok’s CG and ToU offer no indications that limiting visibility is an enforcement type employed by the platform.

The above findings have significant implications for the freedom of expression of the users. Without the legal certainty that the CGs and ToUs should offer, users of the platforms remain in the dark about the relation between the content they are allowed to put online and the consequences that might follow, and about how they can request restrictions on content posted by others. Such a situation is problematic, especially considering the platforms’ public relevance and the role they play in the public debate.

Whether you agree with the actions taken by the platforms or not, the case studies clearly show that it cannot be concluded with certainty that these actions could have been predicted by their users based on their CG and ToU. To safeguard the freedom of expression of users, it should be a fundamental objective of the DSA proposal to require more transparency on this point. The question remains how to achieve this. This will be the subject of the third and final blog in this series.

Britt van den Branden, Sophie Davidse and Eva Smit are students of the IViR research master in information law and of the “Glushko & Samuelson Information Law and Policy Lab” (ILPLab). For this ILPLab research project, they have worked in partnership with the DSA Observatory and with AWO data rights agency.