In between illegal and harmful: a look at the Community Guidelines and Terms of Use of online platforms in the light of the DSA proposal and the fundamental right to freedom of expression (Part 1 of 3)

By Britt van den Branden, Sophie Davidse and Eva Smit*

This blogpost is part one of three. Blogposts 2 and 3 will follow soon.

Just before Christmas Day last year, the European Commission published a proposal for a Digital Services Act (DSA). The proposal introduces a new range of harmonised obligations for digital services (including specific obligations for very large online platforms). The draft Regulation requires transparency and accountability from intermediary service providers, especially from very large online platforms, as they function as gatekeepers of information. The DSA also includes provisions which give users more control through effective user safeguards, such as the right to challenge platforms’ content moderation decisions.

Contrary to the e-Commerce Directive, the DSA puts the protection of freedom of expression at its very core. This is reflected by the choice, as emphasised in its explanatory memorandum, that harmful content should neither be defined in the draft Regulation nor be subject to removal obligations, as it is “a delicate area with severe implications for the protection of freedom of expression”. Only illegal content appears in the definitions under Article 2 DSA. In Article 2(g) DSA, illegal content is defined as “any information, which, in itself or by its reference to an activity, including the sale of products or provision of services is not in compliance with Union law or the law of a Member State, irrespective of the precise subject matter or nature of that law.” Because the DSA defines illegal content only, it is difficult to define what harmful content is and to draw a clear line between illegal and harmful content. Due to the above-mentioned implications for freedom of expression, the latter category has been left to the discretion of intermediary service providers.

The content moderation practices of intermediary service providers, e.g. social media platforms, are governed by their own Community Guidelines and Terms of Use (hereafter: CG and ToU). In Article 2(p) DSA, content moderation is defined as “the activities undertaken by providers of intermediary services aimed at detecting, identifying and addressing illegal content or information incompatible with their terms and conditions.” Article 12 DSA lays down requirements for the terms and conditions, stating that they should provide users with information concerning the procedures, measures and tools used in content moderation. That information should not only be set out in a clear and unambiguous manner, but should also be publicly available and in an easily accessible format. Moreover, platforms are required to act in a diligent, objective, and proportionate way when moderating content, thus keeping in mind the fundamental rights and interests of the parties involved. With no further guidance offered by the DSA on how to deal with harmful content, the platforms retain significant room for self-governance, which in turn has implications for the online freedom of expression of their users. It is therefore of great importance that the CGs and ToUs are at least sufficiently clear and transparent. In this way, users of the platform can understand and predict when and how their freedom of expression may be limited.

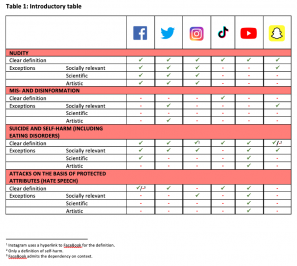

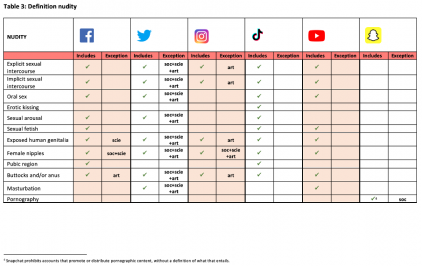

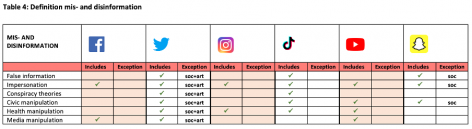

To investigate whether the terms and conditions of very large online platforms are aligned with the requirements of Article 12 DSA regarding the way they deal with harmful content, we have looked at the CGs and ToUs of six well-known very large online platforms: YouTube, Twitter, Snapchat, Instagram, TikTok and Facebook. These platforms all deal with similar problematic content online. We have selected four content categories that do not qualify as explicitly illegal content, but rather as harmful content. These are: 1) nudity, 2) mis- and disinformation, 3) suicide and self-harm (including eating disorders), and 4) attacks on the basis of protected attributes (hate speech). We have compared the CGs and ToUs of these platforms to examine what content they act upon and how their content moderation practices may impact the online freedom of expression of their users. Our research has been summarised in a set of comparative tables.

In comparing the CGs and ToUs of the platforms, we found some significant differences. Three findings stand out.

Most important findings

Firstly, it appears that in some cases the CGs and ToUs of the very large online platforms do not provide a clear definition for certain categories of content against which action can be taken. As a result, it is not clearly delineated what is and/or could be classified under a certain category and what is not. For example, of the platforms examined, TikTok is the only one which offers a clear definition of disinformation. YouTube, by contrast, does not define at all what qualifies as such.

Secondly, the six platforms also differ in how they say they deal with exceptions to content restrictions. For example, some platforms seem to leave no room for exceptions for content that consists of disinformation, while others recognise the possibility of socially relevant, scientific or artistic exceptions. These differences become most apparent in the suicide and self-harm category. In the CG and ToU of TikTok, no exceptions are found which would allow this type of content under certain circumstances. On the other hand, Twitter, Instagram, and Snapchat could admit suicide and self-harm related content when it is placed in a context which makes a post socially relevant. Moreover, Facebook specifies that this type of content is not prohibited on its platform when it can be justified from a scientific point of view. YouTube, by contrast, potentially permits this type of content by virtue of all of the exemptions mentioned above.

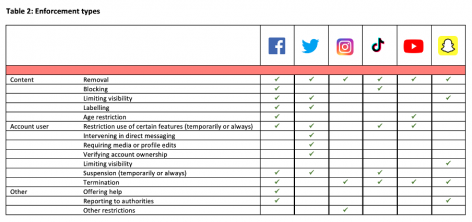

Thirdly, it often remains unclear what type of enforcement is used in which specific situation. Although the platforms do provide some insight into the array of enforcement types they could potentially employ, the CGs and ToUs do not provide enough detail on which specific type of restriction will be adopted against a certain type of content. Consequently, a moderation practice such as the removal of content, which is named in all the CGs and ToUs analysed, may often be perceived by the user as unexpected and unfounded. An example is Instagram, which names only a few enforcement types in its CG and ToU. At the same time, however, it also mentions that it uses ‘other restrictions’, without giving further clarification on what these restrictions might be or to what types of content they will be applied.

From this first analysis it has become clear that the platforms differ in their own sets of rules. As observed above, because the DSA only defines illegal content, platforms retain leeway when it comes to harmful content. In particular, it is not always clear which content falls within a category and how it is acted upon. Those elements combined could be very detrimental to the freedom of expression. We will discuss in our next blog post whether these differences in content moderation rules on paper also become apparent in practice. In order to do so, we will examine the actions taken by the platforms against former President Trump in the days after the storming of the Capitol in January this year, and also against the COVID-19 conspiracy theory known as the ‘Plandemic’.

Stay tuned to see the theory put into practice in the second part of this blog series.

Britt van den Branden, Sophie Davidse and Eva Smit are students in the IViR research master in information law and in the “Glushko & Samuelson Information Law and Policy Lab” (ILPLab). For this ILPLab research project, they have worked in partnership with the DSA Observatory and with AWO data rights agency.